Alibaba Group has introduced ‘Animate Anyone’, a tool that transforms static images into character videos. What does this mean for the world of digital content? Let’s break it down.

If the flood of AI tools for generating images, making videos, and animating avatars is already starting to overwhelm you, sit back and see what’s coming next.

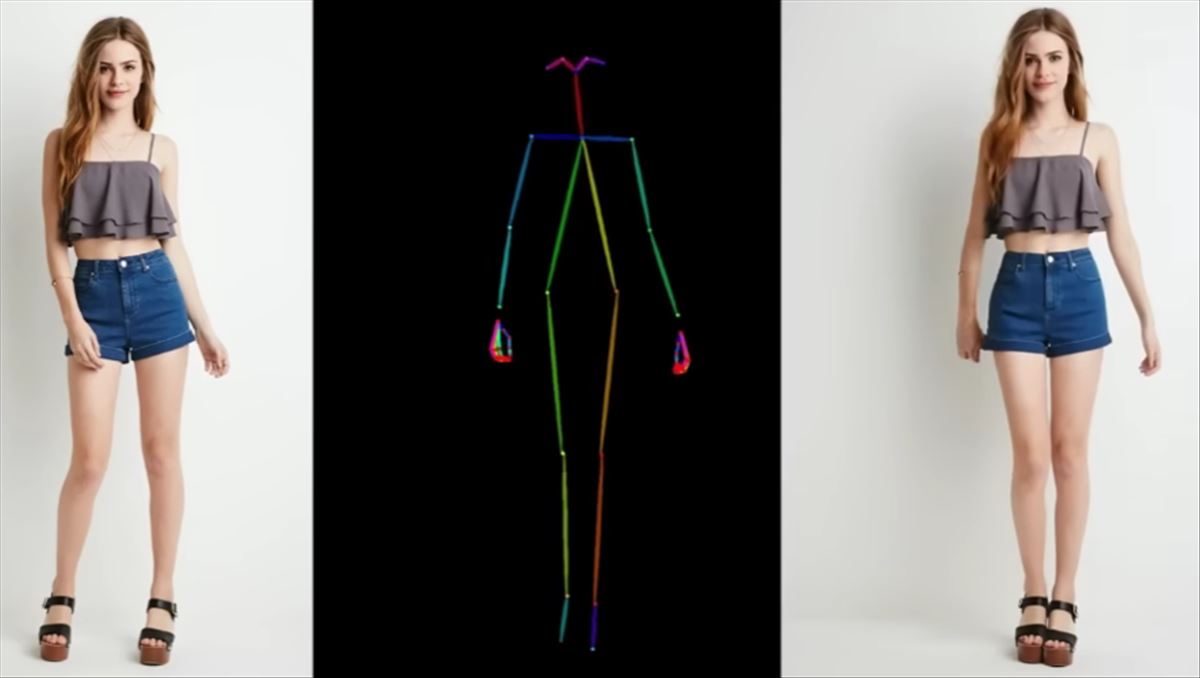

The tool is built on diffusion models, a leading technique in visual generation. The approach focuses on keeping the animation temporally consistent while preserving detailed information from the source image. What stands out is how well the fine details of the original image survive the animation.

What really sets ‘Animate Anyone’ apart is ReferenceNet. This network extracts detailed features from the reference image and merges them into the generation process through spatial attention, so the character keeps its distinctive appearance. It is as if the image comes to life while keeping its original essence, which is fascinating.
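To make “merging features through spatial attention” more concrete, here is a minimal sketch, not Alibaba’s code. It assumes, as we read the Animate Anyone paper, that the features of a video frame and of the reference image are concatenated along the spatial axis, attended to jointly, and that only the outputs at the frame’s own positions are kept. The helper names (`self_attention`, `reference_spatial_attention`) are hypothetical, and the learned query/key/value projections and multiple heads of a real network are deliberately left out.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention.

    Simplification: queries, keys and values are the tokens themselves
    (no learned projection matrices)."""
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        weights = softmax(scores)
        outputs.append([sum(w * value[d] for w, value in zip(weights, tokens)) for d in range(dim)])
    return outputs


def reference_spatial_attention(frame_tokens: List[Vector], ref_tokens: List[Vector]) -> List[Vector]:
    """Hypothetical helper: fuse reference-image features into one frame.

    Frame tokens and reference tokens are concatenated along the spatial
    axis, attended jointly, and only the outputs at the frame's own
    positions (the first half) are returned."""
    joint = frame_tokens + ref_tokens
    fused = self_attention(joint)
    return fused[: len(frame_tokens)]
```

For example, `reference_spatial_attention([[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0]])` returns two fused vectors for the frame’s two positions. Because every frame position can attend to every reference position, appearance details from the reference can flow into each generated frame.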

To achieve natural movements and smooth transitions, the system uses a pose guider. Think of it as directing an actor in a scene: this component dictates the character’s poses so that each movement stays smooth and controlled.
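As we understand the paper, the pose guider is a lightweight convolutional encoder that brings a pose image down to the resolution of the noise latent, and its output is added to that latent before denoising. The sketch below only illustrates that data flow under stated assumptions: repeated 2x2 average pooling is a stand-in for the learned convolution layers, everything is a single-channel grid, and the function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def avg_pool_2x2(grid: Grid) -> Grid:
    """Halve height and width by averaging each non-overlapping 2x2 block."""
    height, width = len(grid), len(grid[0])
    return [
        [
            (grid[i][j] + grid[i][j + 1] + grid[i + 1][j] + grid[i + 1][j + 1]) / 4.0
            for j in range(0, width - 1, 2)
        ]
        for i in range(0, height - 1, 2)
    ]


def encode_pose(pose_map: Grid, downsample_steps: int) -> Grid:
    """Stand-in for the pose guider: repeated average pooling brings the pose
    map down to latent resolution (the real module uses learned conv layers)."""
    features = pose_map
    for _ in range(downsample_steps):
        features = avg_pool_2x2(features)
    return features


def condition_noise_latent(noise_latent: Grid, pose_map: Grid, downsample_steps: int) -> Grid:
    """Add the encoded pose to the noise latent, element by element."""
    pose_features = encode_pose(pose_map, downsample_steps)
    if len(pose_features) != len(noise_latent) or len(pose_features[0]) != len(noise_latent[0]):
        raise ValueError("encoded pose and noise latent must have the same shape")
    return [
        [n + p for n, p in zip(noise_row, pose_row)]
        for noise_row, pose_row in zip(noise_latent, pose_features)
    ]
```

With an 8x8 pose map containing a diagonal “limb” and a 2x2 latent of zeros, two pooling steps give `[[0.25, 0.0], [0.0, 0.25]]`: the pose survives as a coarse spatial hint that the denoiser can follow.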

A crucial aspect of animation is the transition between frames. This is where temporal modeling comes in, preventing jumps or inconsistencies between consecutive frames. It’s like watching a film in which each frame flows seamlessly into the next.
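One common way to implement this kind of temporal modeling is attention along the frame axis: each spatial position looks at the same position in the other frames. The paper describes temporal layers in this spirit; the sketch below is our own simplified illustration, not the project’s code. It assumes single-head attention without learned projections and a residual connection that adds the attended signal back to the input, and `temporal_attention` is a hypothetical name.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention (no learned projections)."""
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        weights = softmax(scores)
        outputs.append([sum(w * value[d] for w, value in zip(weights, tokens)) for d in range(dim)])
    return outputs


def temporal_attention(features: List[List[Vector]]) -> List[List[Vector]]:
    """features[t][p] is the feature vector of frame t at spatial position p.

    For each spatial position, attend across frames only, then add the
    result back to the input (residual connection)."""
    num_frames = len(features)
    num_positions = len(features[0])
    output: List[List[Vector]] = [[[] for _ in range(num_positions)] for _ in range(num_frames)]
    for p in range(num_positions):
        sequence = [features[t][p] for t in range(num_frames)]  # one position, all frames
        attended = self_attention(sequence)
        for t in range(num_frames):
            output[t][p] = [x + a for x, a in zip(sequence[t], attended[t])]
    return output
```

Attending only along time keeps the cost per position proportional to the number of frames squared, and it lets each frame borrow information from its neighbours, which is what smooths out frame-to-frame jumps.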

The arrival of ‘Animate Anyone’ could be a turning point for content creators on platforms like Instagram and TikTok. The ability to create detailed videos from a single image could challenge traditional content creation methods and opens up a wide range of possibilities, from complex animations to 360-degree turns of a character, all starting from one picture. This is great news for some creatives and marketers, and bad news for others in the same field who have specialized in exactly what this tool now does automatically.

Although there’s no release date yet, the Alibaba team is working to make ‘Animate Anyone’ more accessible, turning it from an academic prototype into something we could all use. Expectations are high, and many of us are already waiting to try it.

The project is available on GitHub.