Google's search engine keeps gaining popularity thanks to its voice search. The rise of smartphones brought with it tools like Shazam, which recognize a song by listening to a short fragment. That never fully solved the problem of having a song in your head but no audio clip to identify it with. Google claims it can now fix that: its AI recognizes songs when you hum, whistle or attempt to sing them.

At an event dedicated to Search, the company announced several updates to its search engine, covering how it has improved and how it will continue to improve in the near future. One of the most curious features is song detection: Google says the search engine can now recognize songs from humming.

Google Search Engine: Singing, whistling and humming

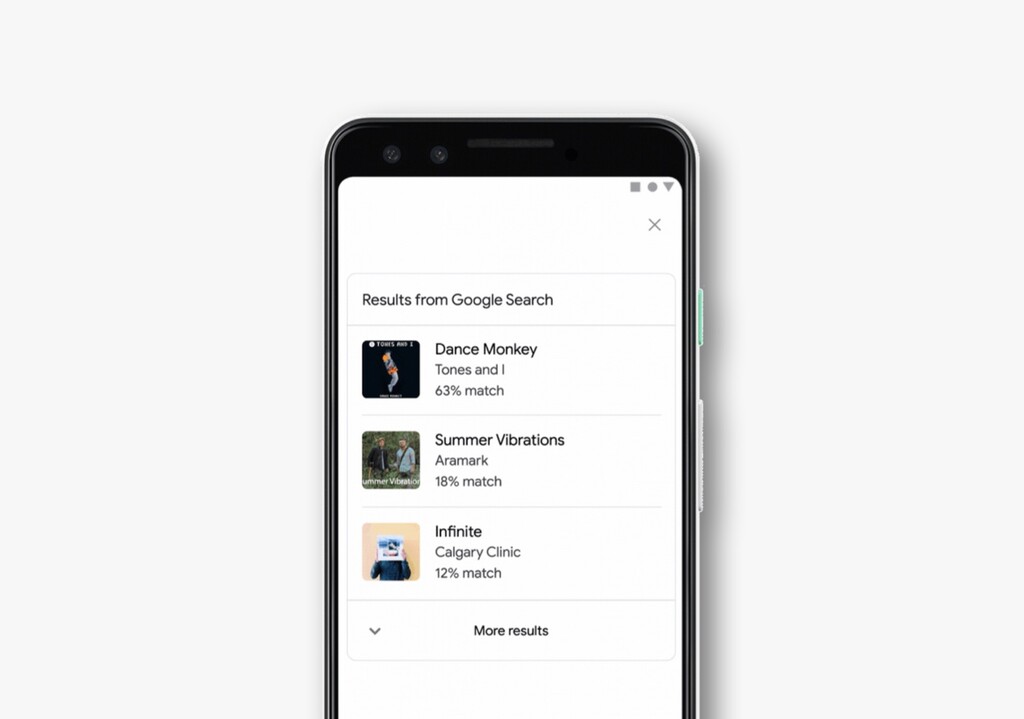

The new feature is available today in the Google app for smartphones and in Google Assistant. Just ask Google “what is this song?” (or a variation of that question) and then hum, whistle or sing it. Google shows several results ordered from most to least likely to be the song you are looking for. From there you can play each song to check whether it is the right one.

How does Google do this? According to the company, it uses a machine learning model that turns what is hummed or whistled into a “number sequence”. That numerical sequence is then compared with the sequences already computed for existing songs. The more closely the two sequences match, the more likely it is to be the song you are looking for.
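To make the idea concrete, here is a minimal sketch of the "turn a melody into numbers, then compare" approach. It is not Google's model: the learned model is replaced by a simple hand-written encoding (the intervals between consecutive notes, which stay the same when a tune is hummed in a different key), and the comparison is plain cosine similarity. The function names, the tiny catalog and the MIDI-style note numbers are all assumptions made for illustration.

```python
from math import sqrt

def to_number_sequence(pitches):
    """Encode a melody as the intervals between consecutive notes.

    Stand-in for a learned model: intervals do not change when the
    same tune is hummed higher or lower.
    """
    return [b - a for a, b in zip(pitches, pitches[1:])]

def similarity(a, b):
    """Cosine similarity over the overlapping prefix of two sequences."""
    n = min(len(a), len(b))
    a, b = a[:n], b[:n]
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_songs(hummed_pitches, catalog):
    """Return (title, score) pairs ordered from most to least similar."""
    query = to_number_sequence(hummed_pitches)
    scored = [
        (title, similarity(query, to_number_sequence(pitches)))
        for title, pitches in catalog.items()
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)

# Hypothetical catalog: opening notes as MIDI-style note numbers.
CATALOG = {
    "Twinkle Twinkle Little Star": [60, 60, 67, 67, 69, 69, 67],
    "Ode to Joy": [64, 64, 65, 67, 67, 65, 64],
    "Happy Birthday": [60, 60, 62, 60, 65, 64],
}

# The same "Twinkle" opening, hummed two semitones higher.
hummed = [62, 62, 69, 69, 71, 71, 69]
results = rank_songs(hummed, CATALOG)

for title, score in results:
    print(f"{title}: {score:.2f}")
```

Even though the hummed notes are in a different key, "Twinkle Twinkle Little Star" comes out on top, because only the shape of the melody is compared. A real system would need a far more robust encoding to cope with off-key or off-tempo humming, which is where a trained model comes in.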

Google says it trained the model on people singing, whistling and humming. The model also disregards instruments and the vocal quality of each user. That is the difficulty and the challenge of features like this in the Google search engine: understanding people when they are at their most human. In other words, getting closer to natural language and depending less on receiving precise data. Like when it understands what we mean by “Aguanchu bi fri”.

The feature is available from today on Android and iOS through the Google app, as well as through Google Assistant. It is not limited to English but is available in more than 20 languages, according to the company, with more to come soon.