Today we are going to look back at the history of NVIDIA to find the graphics cards that delivered the best and the worst results. There is no doubt that some of the picks will be controversial.

All companies have their failed products, but we are going to break down the worst and best GPUs of the “Green Team” for old times’ sake. Surely some of you owned one of these models or a similar one. We are not here just to bash NVIDIA: we also highlight its most legendary models.

The worst graphics cards in NVIDIA history

We start with the worst of NVIDIA, a company that stumbled in its early days before it found stability through a string of successes. Even so, it is worth taking a look at its worst graphics cards.

nVidia NV1 (1995)

Launched in 1995, this GPU was controversial for its price and its features, but above all for its use of quadratic surfaces: at that time, polygon rendering was the better approach. What’s more, many users had problems with the audio, which reviews described as mediocre. It was closely linked to the Sega Saturn, a console that was a failure compared with other consoles of the time.

The problem with quadratic surfaces was compatibility: few games supported them, no more than 10 in total. The card’s only asset was that we could play Saturn games on it. NVIDIA developed an NV2 chip as an evolution of this design, but the developers and SEGA withdrew their funding. In the end it came to nothing, and NV1 cards piled up in stores because nobody was buying them: 3dfx won with its Voodoos (for now).

GeForce 5800 (2003)

We are referring to the GeForce FX 5800, a high-end graphics card from the fifth generation of GeForce, which introduced the GeForce FX architecture. We include it in this compilation of NVIDIA graphics cards from worst to best because its Shader Model 2 performance was poor, it was a dust magnet, and it was controversial because of its noise: it howled like a demon!

In terms of performance, the controversy centered on the techniques used to raise the FPS, which were not satisfactory at all. This GPU was so disappointing that NVIDIA released the FX 5900 Ultra to fix the mess: it was quieter and offered a slight performance lead over the Radeon 9800 Pro in titles that did not use Shader Model 2.0.

Finally, the FX 5800 Ultra was disappointing because the Radeon 9700 Pro was the better card and, on top of that, much quieter.

9800 GTX (2008)

The 9800 GTX received little praise: it was very expensive and not much better than the previous generation. In fact, the older 8800 GTX could even beat it in certain titles, which was unacceptable! Keep in mind that ATI and NVIDIA released new generations of graphics cards annually: every year, a new family.

Today things have changed, and we see a new generation every 2-3 years or so. The point is that NVIDIA’s GTX 200 generation crushed the 9000 series GPUs. To make matters worse, the Radeon HD 4890 came in 1 GB and 2 GB VRAM variants, while NVIDIA had opted for 512MB GDDR3 in its GTX.

GTX 480 (2010)

Opinions are divided here: some people say it was disappointing, while others have good memories of the GTX 480. This is the Fermi generation, an architecture that was criticized because its graphics cards ran so hot.

These cards were compared with the AMD Radeon HD 5000 series (AMD had bought ATI), and many people complained about their low performance, high power consumption and scandalous temperatures. What’s more, the cards became the subject of memes, while AMD users were very satisfied with their HD 5000 (which was very good).

GeForce GTX 590 (2011)

The NVIDIA GTX 590 had serious problems and was not a great product: it was a dual-GPU card whose power consumption and temperatures were monstrous. There are several videos on YouTube showing GTX 590 cards burning out and failing, a sign that the card was not sufficiently tested at the time. Without a doubt, in a ranking of NVIDIA graphics cards from best to worst, this one has to appear on the negative side.

ASUS GT 640 and GTX 660 (2012)

The problem with this generation was that the GPUs were too expensive for what they offered, or so the public thought. They used the Kepler architecture, which was created to restore the efficiency that Fermi had wrecked. NVIDIA had improved many aspects of texture loading in Kepler and even increased the memory frequency. There were many complaints about the price of the GeForce 600 series because the cards did not offer a huge amount of power, but here again opinions are divided. Could it be that AMD was doing things well?

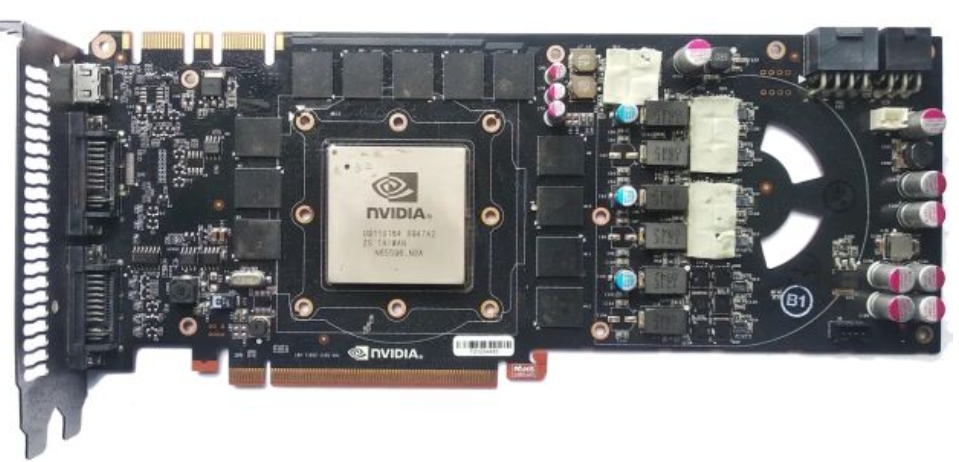

GTX 700 (2013)

The GTX 700 cards were not bad. The problem was that the series was a mere refresh of the GTX 600, with slightly better energy efficiency and a small increase in power. They used an updated version of the Kepler architecture, but the GTX Titan and Titan Z of that era stood out as “the beasts.” Why do we list it as a bad GPU? Because the GTX 780 was obsolete in just 1 year, and it was a GPU that cost around $500. To give you a more current idea, it was a GPU.

The best GPUs in NVIDIA history

We return to normality: NVIDIA’s general line is to make very good graphics cards at an unpopular price, and it is still the first choice for most users. Having left behind the cards that convinced the least, we move on to the ones that landed best.

Riva 128 (1997)

Known as NV3, the RIVA 128 was released in 1997 and saw NVIDIA redeem itself after the NV1; there was also a more powerful variant called the RIVA 128ZX, which came out a year later. NVIDIA took a close look at Microsoft and its first version of DirectX, and released the RIVA 128 to take advantage of all the benefits of this API. It is worth noting that it supported AGP 2x while its rivals used PCI or AGP 1x, so its biggest asset was its very good performance in both 3D and 2D.

It was not long before it was compared with 3dfx’s Voodoo and criticized for its rendering quality and for certain graphical errors. However, many ranked it above 3dfx GPUs.

GeForce 256 and GeForce 2 GTS (1999)

In our NVIDIA history entry, we described this graphics card as the first “GPU” in the company’s history. Coming from the RIVA TNT2, the GeForce 256 increased performance by 50% and came with 32MB or 64MB of DDR memory. The GeForce 2 GTS was launched in 2000, the very year that NVIDIA bought 3dfx and absorbed all its intellectual property (including the famous SLI). The GeForce 2 GTS had twice the TMUs of the 256 and was offered in 3 different GPU variants. The novelty was that it supported several simultaneous monitors.

GeForce 6800 Ultra (2004)

Remembered with much nostalgia by many, the 6800 Ultra saw NVIDIA make up for a controversial generation (the FX 5000 series) and topped the high end with 256MB GDDR3 at 1.1GHz. It was a GPU that used PCIe 1.0 x16 and led its range because ATI did not offer much competition at the high end. It is worth noting that the GeForce 6800 came with up to 512MB GDDR3 and a 256-bit bus, and was twice as fast as the FX 5950 Ultra. What a beast! Of course, it was not cheap and it was between

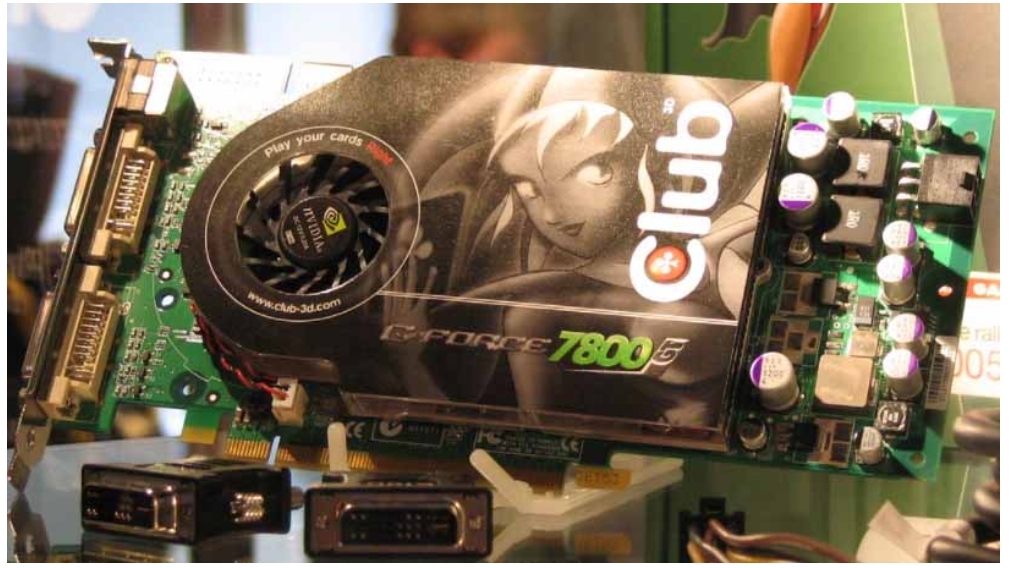

GeForce 7800 GTX

The NVIDIA 7800 GTX belongs to the first “GTX” range in the history of the green brand, and it reduced the 6800 to a mere anecdote, especially after the release of a 512 MB variant with better temperatures and lower latency than its predecessor. It is hard to find users who were disappointed with this graphics card, as NVIDIA has always been dominant in the high end of each generation.

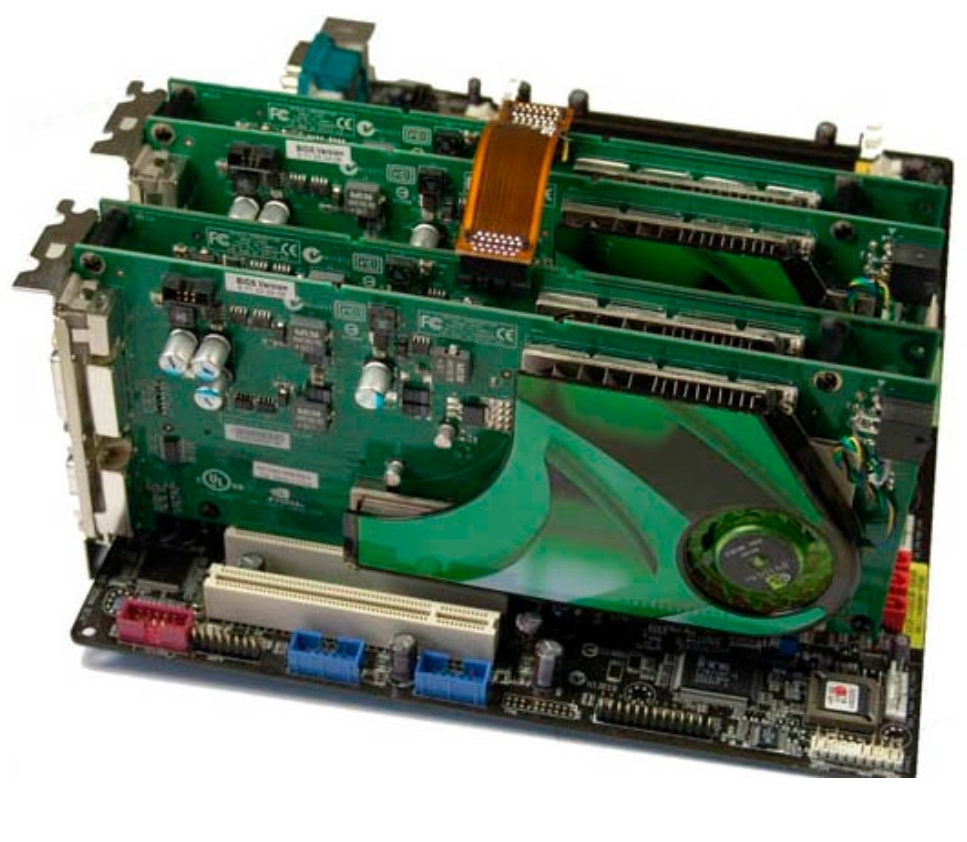

GeForce 7950 GX2 (2006)

We are still in the same generation, only now we have “a GPU” that is a beast: 2 GPUs in 1. Basically, it was like having two 7800 GTX cards, because this model carried 2 G71 chips and 1 GB of GDDR3, and you could play at 2560 x 1600 with it, in 2006! Its price hovered around $600, which was quite high for the time, as was its power consumption: there are 2 GPUs to feed. It was a beast of a graphics card.

GTX 280

We also had the GTX 295 as an emblem of that time, but I have to say that the GTX 280 was a great graphics card. The GTX 200 series came with the “Parallel Computing” architecture, not to mention the GPU-level improvements it brought. This architecture laid the foundation for the ones NVIDIA developed next, so we can’t forget about it.

Interestingly, there was talk of Stream Processors, which is the name AMD uses for the cores in its graphics cards. NVIDIA now uses CUDA Cores as the pillars of its chips: the more CUDA Cores, the more power (although performance does not depend on this alone).

In this generation there were many SLI configurations, something that seems to have disappeared from the RTX 2000 series onwards. Of course, the story had its gray side when the HD 4870 came on the market and competed head to head with this NVIDIA card.

GTX 980 and GTX 980 Ti

We put on our formal wear, because things get serious when talking about the GTX 900 series. It was a generation based on the Maxwell architecture, which succeeded Kepler, and it may be the family of GPUs with the most SLI configurations on the planet. I don’t know of anyone who has had a bad experience with these graphics cards, and in my group there were many people with a GTX 970 or GTX 960.

The AMD alternative was not very interesting in terms of value for money, and NVIDIA won people over with its graphics cards built by TSMC. It did not escape criticism, though: the GTX 970 did not come with a full 4GB, but with “3.5GB + 0.5GB”. That is, the first 3.5GB were fast, and if more was needed, performance would plummet. There were also discrepancies in the caches, but all the problems were limited to the GTX 970 and did not affect the other cards.

That said, I do not know of anyone close to me who was affected by this anomaly, and the users I know with this GPU had a blast using it for 3 or 4 years. With all this, we must highlight the queens: the GTX 980 and GTX 980 Ti. While the GTX 970 and 980 used the GM204 GPU, the GTX 980 Ti used the same chip as the Titan X. Basically, the differences were the following:

- One had 4GB GDDR5, while the other went up to 6GB GDDR5.

- A difference of almost a thousand CUDA cores.

- A $100 price difference.

- Almost 1 year between launches (one in September 2014 and the other in June 2015).

Two thumbs up for NVIDIA: we couldn’t leave these cards out of this compilation of graphics cards from worst to best.

GTX 1060 and GTX 1080 Ti (2016)

If before we put on the evening dress, now we put on the tuxedo to welcome, perhaps, one of the best generations that NVIDIA has ever released: the GTX 1000. They were based on the Pascal architecture, which demonstrated overwhelming potential over its rivals, as well as outsized performance.

Just how good were these graphics cards? The most used GPU on Steam is a GTX 1060. Specifically, we saw 2 versions: one with 3 GB GDDR5 and another with 6 GB GDDR5. I want to highlight the crisis that hit the graphics card sector due to the rise of Bitcoin: everyone bought GPUs, prices went up, and we saw a 6 GB GTX 1060 selling for €400.

In reality, its price was between €280 and €350, and it was a GPU that offered more than enough performance for 1080p at 60 FPS. We should not forget the 8GB GTX 1070, which was also a great product because it had no rival in its range, although its price was less popular. But we have to talk about the GTX 1080 and 1080 Ti: the crown jewels.

Specifically, the GTX 1080 Ti was the first GeForce desktop GPU to carry 11 GB GDDR5X, which was outrageous at the time. It is a GPU that is still widely used, and it was bought in large numbers for mining because its performance was brutal. This is when people started talking about 4K, but there was still a lot of development and power to come.

RTX 2070 Super and RTX 2080 Super (2018)

Ray Tracing arrived, a technology that NVIDIA wanted to get people excited about in 2018, and behind it all were the Turing architecture and the RTX 2000 series. I have not found anyone dissatisfied with a GPU from this family, but NVIDIA set aside its Ti variants to make way for the SUPER models: very interesting souped-up versions.

NVIDIA had to compete with RDNA and the RX 5000 series, a short but intense generation that had no high-end option. NVIDIA saw the RX 5700 XT perform brutally (without RT) and go toe to toe with the RTX 2070, so it released the RTX 2070 Super and that was that. We are talking about a time when playing in QHD (1440p) was becoming normal, while NVIDIA DLSS was emerging as an essential technology to make Ray Tracing viable.

If we have to highlight any models, they are the RTX 2070 Super and the RTX 2080 Super. True, NVIDIA offered its RTX 2080 Ti variant, but it was absurdly expensive for what it offered compared with the Super cards. I happen to be an RTX 2070 Super user and I am delighted with it: it is a very interesting GPU that delivers what it promised.

As for the RTX 2080 Super, we saw a custom model on sale for about €600-700, which was a “bargain.” What beautiful times, right? This GPU can handle tons of games in QHD at 144 FPS, ideal for 1440p monitors at 144 Hz.

RTX 3080 and RTX 3090 (2020)

We finish with 2 monsters of graphics cards: one offers versatility and the other “laughs” at 4K while flirting with 8K. In 2020, a pandemic year, Ampere arrived: the RTX 3000 series, with 2nd generation Ray Tracing, Samsung’s 8nm process and 4K performance that borders on 144 FPS in many current AAA games.

Both come with groundbreaking GDDR6X memory: the RTX 3080 with 10GB GDDR6X (12GB for the RTX 3080 Ti) and the RTX 3090 with 24GB GDDR6X. In every comparison I have written, I have said it and I stand by it: the RTX 3080 is the most versatile GPU of the latest generation. The RX 6000 cards are great too, but Ray Tracing counts against them because their FSR isn’t as polished as NVIDIA’s DLSS.

Ampere’s only drawbacks are the semiconductor crisis and the high price of graphics cards, because there is not enough stock to meet demand. Unfortunately, this series has been overshadowed by that situation, as well as by its very high power consumption. A very powerful power supply is required for an RTX 3080 or 3090.