Barely a year ago OpenAI announced the launch of DALL-E 2, an artificial intelligence system capable of generating striking images from text requests (‘prompts’). Since then, this engine and its competitors, Stable Diffusion and Midjourney among them, have kept improving, and now the idea has gone one step further.

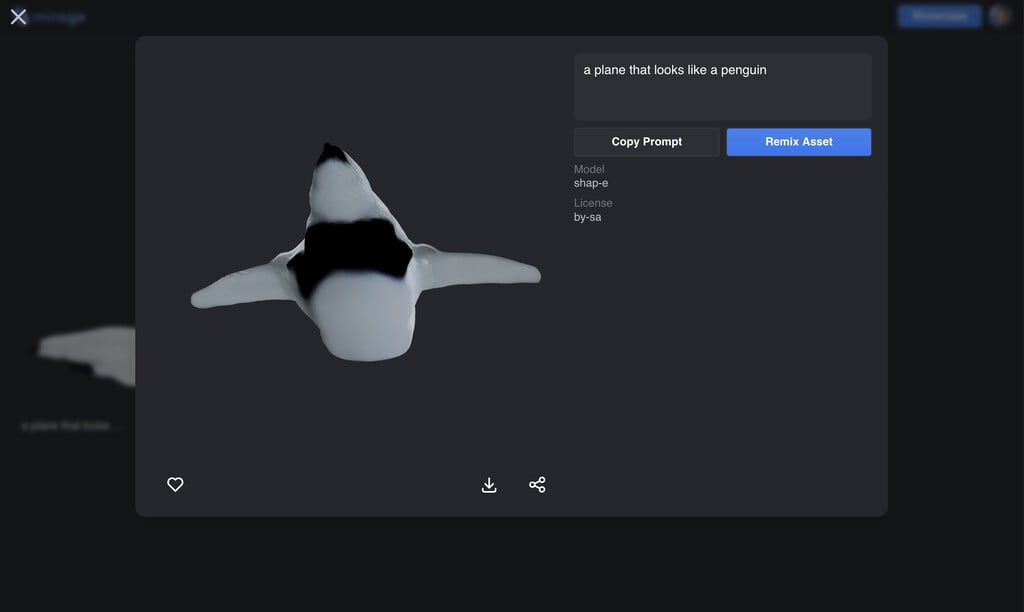

Make me a 3D model. Heewoo Jun and Alex Nichol, OpenAI researchers, recently published a study on Shap-E, a conditional generative model of 3D objects. Models of this type already existed, but they produced a single output representation. Shap-E goes further and generates the parameters of implicit functions that can be rendered either as textured meshes or as so-called NeRFs (Neural Radiance Fields), which gives 3D designers many more possibilities.
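To make the idea of an implicit function more concrete, here is a minimal sketch in Python. It is not Shap-E's code: the sphere, the grid size and the names `sphere_sdf` and `occupancy_slice` are assumptions chosen purely for illustration. The point is that the shape is never stored as a list of triangles; it is defined by a function that can be queried at any point in space, and a renderer or mesh extractor then samples that function.

```python
import math


def sphere_sdf(x, y, z, radius=1.0):
    """Signed distance to a sphere centred at the origin.

    Negative inside the sphere, zero on the surface, positive outside.
    (Illustrative stand-in for a learned implicit function.)
    """
    return math.sqrt(x * x + y * y + z * z) - radius


def occupancy_slice(sdf, z=0.0, resolution=9, extent=1.5):
    """Sample an implicit function on a square grid at height z.

    Returns a list of strings, one per row, using '#' for points inside
    the shape and '.' for points outside it.
    """
    rows = []
    for j in range(resolution):
        # Walk from +extent (top row) down to -extent (bottom row).
        y = extent - 2 * extent * j / (resolution - 1)
        row = ""
        for i in range(resolution):
            x = -extent + 2 * extent * i / (resolution - 1)
            row += "#" if sdf(x, y, z) <= 0 else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Print a coarse cross-section of the sphere through its centre.
    for row in occupancy_slice(sphere_sdf):
        print(row)
```

In a model such as Shap-E the function is a neural network whose parameters are generated from the prompt, rather than a hand-written formula like the one above, but the querying principle is the same.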

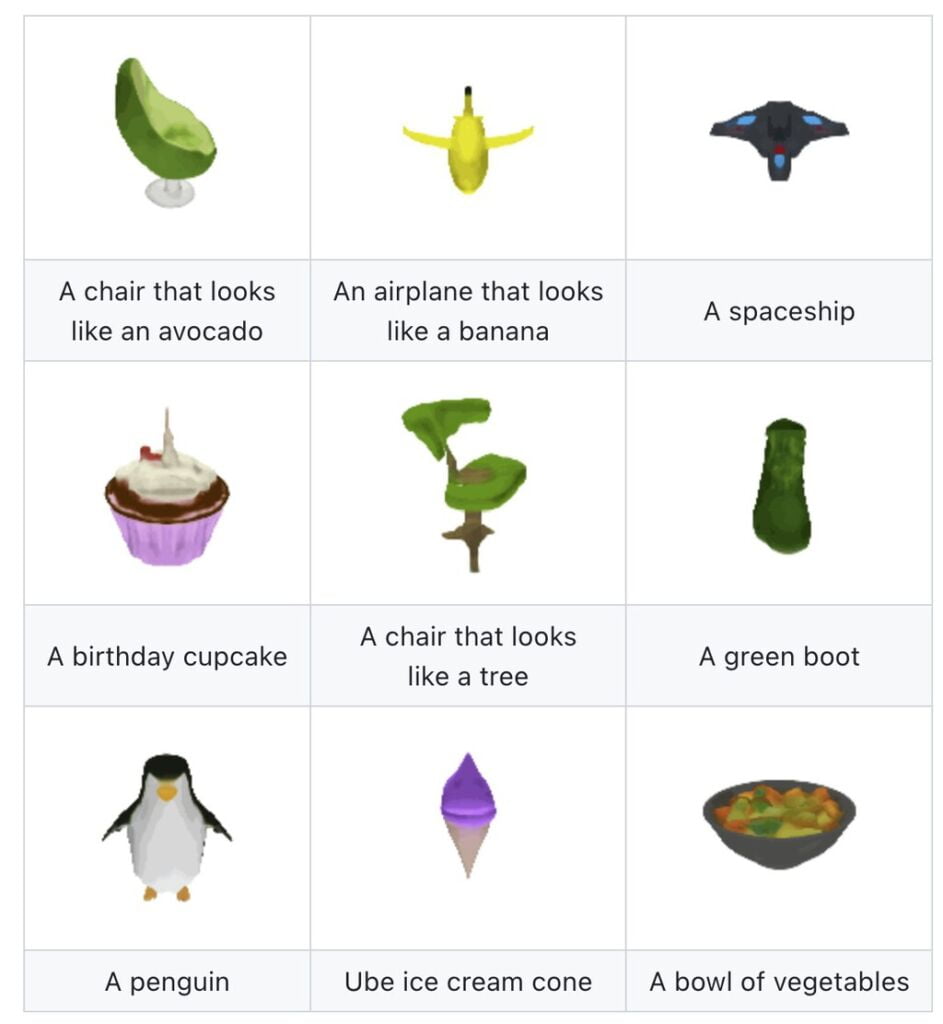

A good start. This generative AI model, whose code is available on GitHub, is still somewhat modest in its results. Do not expect the hyperrealism we have already seen in Unreal Engine 5: this is an initial version of an engine that clearly has plenty of room for improvement. Even so, it can turn a text prompt into a 3D object with results that, although low in resolution and detail, are promising.

Computationally expensive. As happened with the generative AI models that first produced text or images, the computational cost of using this type of technology is high. Shap-E takes about 15 seconds to generate one of these models on an NVIDIA V100 (about $4,000), which makes practical use of the model difficult today.
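As a rough back-of-the-envelope check, the figure of about 15 seconds per model can be turned into a throughput estimate. The sketch below assumes back-to-back generation with no loading or export overhead, and the function name `models_per_hour` is hypothetical; real throughput would be lower.

```python
def models_per_hour(seconds_per_model: float, gpus: int = 1) -> float:
    """Estimate how many 3D models can be generated per hour.

    Assumes each GPU generates models one after another with no idle time.
    """
    if seconds_per_model <= 0:
        raise ValueError("seconds_per_model must be positive")
    return gpus * 3600 / seconds_per_model


if __name__ == "__main__":
    # About 15 seconds per model on a single GPU.
    print(models_per_hour(15))  # 240.0
```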

A powerful tool for 3D designers. Just as models like DALL-E 2, Stable Diffusion and Midjourney have become a distinctive tool for designers and artists, Shap-E and its future alternatives are shaping up to be a powerful aid in the field of 3D design.

Now you can try it. Modeling 3D objects is a complex and labor-intensive task, but this type of model could produce a first draft for the artist to refine, saving part of the initial work. Shap-E can be tested through the Mirage platform.

From there to the 3D printer. It is not difficult to imagine a future in which this type of generative AI no longer produces just 3D models, but models that are ready to be printed on 3D printers. Suddenly, “sculpting” any type of object by entering the appropriate prompt seems much more feasible.